
Monetizing broadcasting data

Ensuring timely data availability for real-time, mission-critical data

24 February 2023 | Noor Khan

Key Challenges

Our client was facing regular reporting delays caused by delays and gaps in their data. Because they deal with real-time, mission-critical data, they needed a much faster ETL (extract, transform, load) pipeline to considerably improve their reporting speed.

Key Details

Service

Data Engineering

Technology

Databricks, Apache Airflow on AWS, Apache Spark, Amazon S3, SFTP (SSH File Transfer Protocol)

Industry

Media

Sector

Media

Key Results

- Significantly reduced data reporting time

- Parallel processing across multiple clusters

- High data reliability and availability

- Robust data security measures and practices

- Automated error resolution

A market-leading, internationally renowned media and broadcasting company

Founded in 2002, our client has been operating for over two decades and is internationally known for handling broadcasting data for commercial use. With a mission of making high-quality technology and content affordable for everyone, they have established themselves as a market leader.

Data reporting delays

The client deals with real-time broadcasting and commercial data, which needs to be available in near real time. Their existing ETL infrastructure, built on AWS technologies such as Redshift, could not meet their expectations and requirements. Our experienced engineers proposed using Databricks for the whole ETL process because of the many benefits it offers, including parallel processing across multiple clusters.

Immense real-time data

The client was handling very large datasets: 215 million records of commercial data and 9 million records of content data were processed every hour. This data was used to produce eighty reports, which needed to be delivered quickly and efficiently.
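To put these volumes in perspective, the short calculation below converts the hourly figures into average per-second rates. It assumes, for simplicity, that records arrive evenly across the hour; real broadcast traffic is likely to be burstier, so peak rates would be higher than these averages.

```python
# Hourly volumes quoted in the case study
COMMERCIAL_RECORDS_PER_HOUR = 215_000_000
CONTENT_RECORDS_PER_HOUR = 9_000_000
SECONDS_PER_HOUR = 3600


def records_per_second(records_per_hour: int) -> float:
    """Convert an hourly record count to an average per-second rate."""
    return records_per_hour / SECONDS_PER_HOUR


commercial_rate = records_per_second(COMMERCIAL_RECORDS_PER_HOUR)
content_rate = records_per_second(CONTENT_RECORDS_PER_HOUR)
total_rate = records_per_second(COMMERCIAL_RECORDS_PER_HOUR + CONTENT_RECORDS_PER_HOUR)

print(f"Commercial: {commercial_rate:,.0f} records/s")  # Commercial: 59,722 records/s
print(f"Content:    {content_rate:,.0f} records/s")     # Content:    2,500 records/s
print(f"Combined:   {total_rate:,.0f} records/s")       # Combined:   62,222 records/s
```

In other words, the pipeline has to sustain roughly 62,000 records per second on average before any reprocessing or backfills are added on top.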

Parallel processing on multiple clusters with Databricks

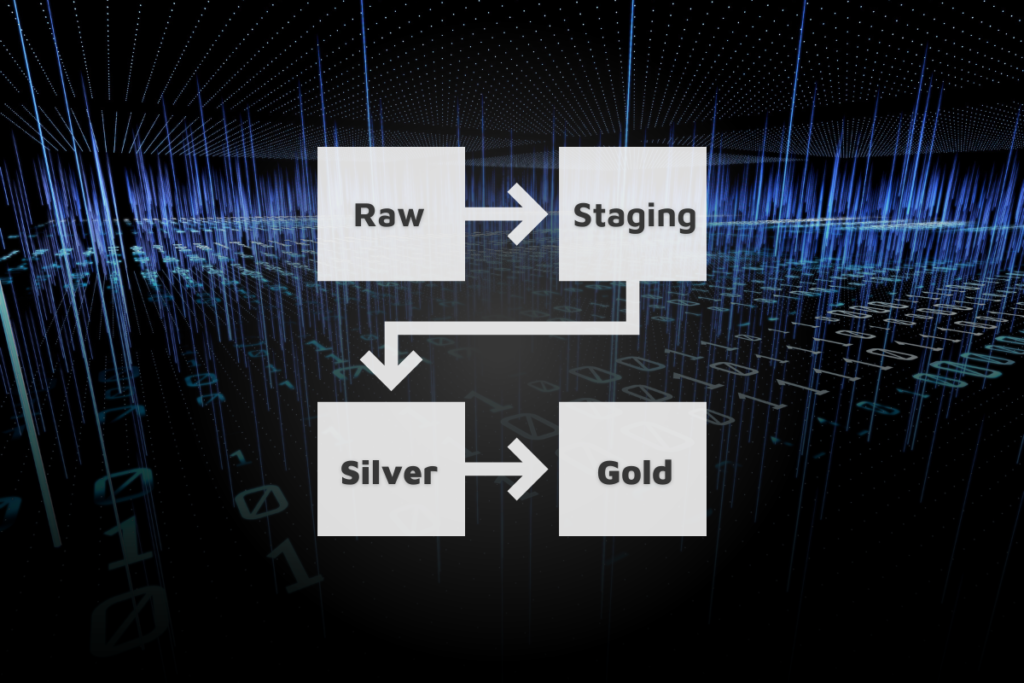

To significantly speed up data availability and reporting, the client needed a solution that could process data in parallel across multiple clusters. We built the ETL pipeline infrastructure in line with the client's requirements, with data flowing through four stages:

- Raw data, extracted from the source

- Staging

- Silver

- Gold

Each stage carried out processing tasks such as de-duplication, validation and cleansing, to ensure that the data loaded to the destination was clean, complete and delivered without delay.
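The flow through these stages can be sketched in plain Python as follows. This is a minimal illustration, not the pipeline built for the client: the field names (`record_id`, `channel`), the validation rule and the gold-layer aggregation are all hypothetical. On Databricks the same steps would typically be written as Spark DataFrame transformations, which Spark distributes across a cluster's workers.

```python
# Minimal sketch of a raw -> staging -> silver -> gold flow.
# Field names and rules are hypothetical; the real schema is not described.

def to_staging(raw: list[dict]) -> list[dict]:
    """Staging: cleanse by normalising keys and trimming string values."""
    staged = []
    for record in raw:
        staged.append({
            key.strip().lower(): value.strip() if isinstance(value, str) else value
            for key, value in record.items()
        })
    return staged


def to_silver(staged: list[dict]) -> list[dict]:
    """Silver: drop records that fail validation, then de-duplicate on record_id."""
    seen_ids = set()
    silver = []
    for record in staged:
        # Validation: both hypothetical required fields must be present and non-empty.
        if not record.get("record_id") or not record.get("channel"):
            continue
        # De-duplication: keep only the first occurrence of each record_id.
        if record["record_id"] in seen_ids:
            continue
        seen_ids.add(record["record_id"])
        silver.append(record)
    return silver


def to_gold(silver: list[dict]) -> dict[str, int]:
    """Gold: aggregate into a report-ready shape (record count per channel)."""
    counts: dict[str, int] = {}
    for record in silver:
        counts[record["channel"]] = counts.get(record["channel"], 0) + 1
    return counts


sample_raw = [
    {" Record_ID ": "a1", "Channel": " ch1 "},  # messy keys and whitespace
    {"record_id": "a1", "channel": "ch1"},      # duplicate of a1
    {"record_id": "a2", "channel": "ch2"},
    {"record_id": "", "channel": "ch2"},        # fails validation
]

print(to_gold(to_silver(to_staging(sample_raw))))  # {'ch1': 1, 'ch2': 1}
```

Keeping each stage as a separate step mirrors the staged design: a rejected record can be traced to the stage that rejected it, and each layer can be rebuilt independently from the one before it.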

Why Databricks?

Databricks is well suited to ETL workloads and provides user-friendly dashboards for tracking the entire process and spotting errors. Our data engineering team is proficient in Databricks and has used it on many client projects, so they were well placed to recommend it as the way to overcome the client's challenges.

Automated error resolution and reporting

The solution was built with the automated error resolution offered by Databricks. Our engineers configured the number of retries the system would attempt before reporting an error for manual intervention. This helps the data engineering team prevent data drops and delays, and resolve errors quickly and efficiently. Two main types of error can occur:

- Data drops – batch processing is incomplete, so that particular data batch is processed again.

- Data gaps – parts of the data are missing, which requires a backfill from the point at which the error first occurred.
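The retry-then-escalate behaviour and the two error types can be illustrated with a framework-free sketch. It is a hypothetical illustration, not Databricks' own mechanism (in Databricks, retries are configured on the job task itself): `run_with_retries` reruns a failing batch (a data drop) up to `max_retries` times before raising for manual intervention, and `backfill_hours` lists every hourly batch from the first missing hour onwards (a data gap). The hourly batch granularity, the simulated batch job and all names are assumptions.

```python
import time
from datetime import datetime, timedelta
from typing import Callable, List, Optional


class ManualInterventionRequired(Exception):
    """Raised when automatic retries are exhausted and an engineer must step in."""


def run_with_retries(task: Callable[[], int], max_retries: int = 3,
                     delay_seconds: float = 0.0) -> int:
    """Handle a data drop: rerun an incomplete batch up to max_retries times."""
    last_error: Optional[Exception] = None
    for attempt in range(max_retries + 1):  # first run plus max_retries reruns
        try:
            return task()
        except Exception as exc:  # the batch did not complete
            last_error = exc
            if attempt < max_retries:
                time.sleep(delay_seconds)
    raise ManualInterventionRequired(
        f"batch still failing after {max_retries} retries") from last_error


def backfill_hours(processed: List[datetime], start: datetime,
                   end: datetime) -> List[datetime]:
    """Handle a data gap: list every hourly batch from the first missing hour to end."""
    have = set(processed)
    hour = start
    # Find the first expected hourly batch with no data.
    while hour < end and hour in have:
        hour += timedelta(hours=1)
    # Backfill from the first gap onwards (empty list if there is no gap).
    hours = []
    while hour < end:
        hours.append(hour)
        hour += timedelta(hours=1)
    return hours


def make_flaky_batch(failures: int) -> Callable[[], int]:
    """Hypothetical batch job that fails `failures` times before succeeding."""
    calls = {"count": 0}

    def batch() -> int:
        calls["count"] += 1
        if calls["count"] <= failures:
            raise RuntimeError("batch incomplete")
        return 1000  # records processed
    return batch


print(run_with_retries(make_flaky_batch(2), max_retries=3))  # 1000
start = datetime(2023, 2, 24, 0)
processed = [start, start + timedelta(hours=1), start + timedelta(hours=3)]
print(backfill_hours(processed, start, start + timedelta(hours=4)))
```

Here the hours 02:00 and 03:00 are returned: the backfill starts at the first gap and reprocesses everything after it, which is the simplest reading of "backfill from when the error first occurred". A zero delay keeps the sketch fast; a real job would wait between retries.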

Ongoing operational monitoring and support of the ETL

Our client is thrilled with the high performance of the new ETL infrastructure built on Databricks, which has made their reporting considerably faster and more efficient. Our expert data engineering team continues to provide ongoing operational monitoring and support to ensure data availability and accessibility at all times.

Ensuring timely data availability for real-time, mission-critical data with Ardent's data engineering services

Ardent's team of highly skilled data engineers is proficient in world-leading data technologies and can make recommendations based on your unique needs and requirements. Whether you have a preferred tech stack or want expert guidance on the right technologies for your data and business, we can help. Are you facing any of these challenges?

- Delayed data reporting turnaround

- Data delays, gaps and dropouts

- Slow data performance and speed

If you are, get in touch today to find out how we can help you unlock the potential of your data.
